Real-Time Scoring & Model Management 1 - Life Cycle

Lately, I have come across an increasing number of discussions on the need for large-scale real-time scoring and model management, so I thought it would be a good idea to write about this topic. I wanted to answer the question: Can we implement a large-scale real-time scoring engine, coupled with model management, using the technologies available in Oracle Database 10g Release 2 (10gR2)? To find out, keep on reading. In this series (Part 1, Part 2, and Part 3) I describe how this can be done and give some performance numbers.

Typical examples of large-scale real-time scoring applications include call center service dispatch and cross-sell of financial products. These applications have in common the need for:

- Scoring many models in real-time

- Filtering models to a relevant set suitable for scoring a particular case

- Managing models

Model Life Cycle

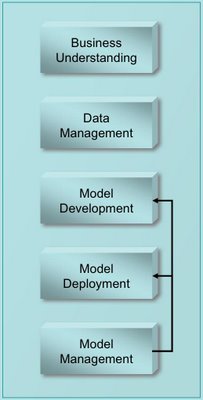

In production data mining, where data mining models are deployed into production systems, models commonly follow a life cycle with five major phases (see Figure 1):

- Business understanding

- Data management

- Model development

- Model deployment

- Model management

Figure 1: Production data mining model life cycle.

Business Understanding

This initial phase focuses on identifying the application objectives and requirements from a business perspective. These objectives determine the types of models needed. For example, an application's objective may be to cross-sell products to customers calling the company's call center. To support this objective, the system designer may create one model per product that predicts the likelihood of a customer buying that product.
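To make the "one model per product" design concrete, here is a minimal Python sketch, assuming each model simply returns a purchase probability for a caller. The `PropensityModel` class, `rank_offers` function, product names, and toy scoring rules are hypothetical stand-ins for trained Oracle Data Mining models, used only to show how scoring every product model and ranking the results yields cross-sell offers.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical customer record: attribute name -> value.
Customer = Dict[str, float]


@dataclass
class PropensityModel:
    """One model per product, predicting P(customer buys the product)."""
    product: str
    score: Callable[[Customer], float]  # returns a probability in [0, 1]


def rank_offers(models: List[PropensityModel], customer: Customer,
                top_n: int = 2) -> List[Tuple[str, float]]:
    """Score every product model for one caller and return the best offers."""
    scored = [(m.product, m.score(customer)) for m in models]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_n]


# Toy scoring rules standing in for trained models (illustrative only).
models = [
    PropensityModel("credit_card", lambda c: min(1.0, c["income"] / 200_000)),
    PropensityModel("mortgage", lambda c: 0.6 if c["age"] < 40 else 0.2),
    PropensityModel("savings", lambda c: 0.3),
]

caller = {"income": 90_000, "age": 35}
print(rank_offers(models, caller))  # → [('mortgage', 0.6), ('credit_card', 0.45)]
```

Because every product has its own model, the number of models grows with the product catalog, which is what later makes model management and model filtering necessary.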

Data Management

The data management phase includes identifying the data necessary for building and scoring models. Data can come from a single table or view or from multiple tables or views. It is also common to create computed columns (e.g., aggregated values) to be used as input to models.
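In the database, computed columns such as aggregates would normally come from a view with a `GROUP BY`. The Python sketch below shows the same idea on a hypothetical list of `(customer_id, amount)` transaction rows; the feature names `txn_count`, `total_spend`, and `avg_spend` are illustrative assumptions, not part of any Oracle schema.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical transaction rows: (customer_id, amount).
transactions: List[Tuple[int, float]] = [
    (1, 120.0), (1, 80.0), (2, 15.0), (1, 40.0), (2, 35.0),
]


def aggregate_features(rows: List[Tuple[int, float]]) -> Dict[int, Dict[str, float]]:
    """Compute per-customer computed columns, like a GROUP BY customer_id."""
    totals: Dict[int, float] = defaultdict(float)
    counts: Dict[int, int] = defaultdict(int)
    for cust, amount in rows:
        totals[cust] += amount
        counts[cust] += 1
    return {
        cust: {
            "txn_count": counts[cust],
            "total_spend": totals[cust],
            "avg_spend": totals[cust] / counts[cust],
        }
        for cust in totals
    }


features = aggregate_features(transactions)
print(features[1])  # → {'txn_count': 3, 'total_spend': 240.0, 'avg_spend': 80.0}
```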

Model Development

Model development includes selecting the appropriate algorithm, preparing the data to meet the needs of the selected algorithm, and building and testing models for their predictive power. Sometimes, it may also involve sampling the data. Typical transformations include: outlier removal, missing value replacement, binning, normalization, and applying power transformations to handle heavily skewed data.
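A key point about these transformations is that their parameters are learned from the build data and must be reused unchanged at scoring time. The following minimal Python sketch illustrates missing value replacement, outlier clipping, min-max normalization, and equal-width binning for one numeric attribute. The `NumericPrep` class, `fit_prep` function, and the clipping bounds (treated here as assumed business limits rather than fitted values) are hypothetical; power transformations are not shown.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional


@dataclass
class NumericPrep:
    """Transformation parameters learned from the build data."""
    fill: float   # missing value replacement (median of build data)
    low: float    # clipping bounds for outlier treatment (assumed limits)
    high: float

    def clean(self, x: Optional[float]) -> float:
        """Replace a missing value, then clip outliers to [low, high]."""
        v = self.fill if x is None else x
        return min(max(v, self.low), self.high)

    def normalize(self, x: Optional[float]) -> float:
        """Min-max normalization of the cleaned value to [0, 1]."""
        return (self.clean(x) - self.low) / (self.high - self.low)

    def bin(self, x: Optional[float], n_bins: int = 4) -> int:
        """Equal-width binning of the normalized value into 0..n_bins-1."""
        return min(int(self.normalize(x) * n_bins), n_bins - 1)


def fit_prep(values: List[Optional[float]], low: float, high: float) -> NumericPrep:
    """Learn the fill value from non-missing build data (median resists outliers)."""
    present = [v for v in values if v is not None]
    return NumericPrep(fill=median(present), low=low, high=high)


build_ages = [25.0, 35.0, None, 45.0, 95.0]
prep = fit_prep(build_ages, low=18.0, high=80.0)
print(prep.fill, prep.clean(95.0), prep.bin(None), prep.bin(95.0))  # → 40.0 80.0 1 3
```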

In most cases, the transformations used during model build need to be deployed alongside the model in the deployment phase. Because transformations are easily implemented as SQL expressions in the database, at scoring time we need to craft scoring SQL queries that include the required transformations. The Oracle Data Miner GUI greatly simplifies this task.
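As a rough illustration of what such a scoring query can look like, the Python sketch below generates SQL text that embeds build-time transformations in the `USING` clause of Oracle's `PREDICTION_PROBABILITY` function. The helper names (`clip_expr`, `zscore_expr`, `scoring_query`), the model name `CC_PROPENSITY`, the `customers` table, its columns, and all numeric parameters are hypothetical; please check the exact scoring-function syntax against the Oracle SQL reference for your release.

```python
from typing import Dict


def clip_expr(col: str, fill: float, low: float, high: float) -> str:
    """Missing value replacement (NVL) followed by outlier clipping."""
    return f"LEAST(GREATEST(NVL({col}, {fill}), {low}), {high})"


def zscore_expr(col: str, mu: float, sigma: float) -> str:
    """Z-score normalization with mean and std. dev. captured at build time."""
    return f"({col} - {mu}) / {sigma}"


def scoring_query(model: str, target_class: str, table: str, key: str,
                  exprs: Dict[str, str]) -> str:
    """Build a scoring query whose USING clause embeds the transformations.

    Each alias must match the attribute name the model was built with.
    target_class is emitted verbatim, so quote it for character targets.
    """
    using = ",\n         ".join(f"{expr} AS {alias}" for alias, expr in exprs.items())
    return (
        f"SELECT {key},\n"
        f"       PREDICTION_PROBABILITY({model}, {target_class} USING\n"
        f"         {using}) AS buy_prob\n"
        f"FROM {table}"
    )


query = scoring_query(
    model="CC_PROPENSITY",   # hypothetical model name
    target_class="1",        # hypothetical numeric target value
    table="customers",       # hypothetical table and columns
    key="cust_id",
    exprs={
        "age": clip_expr("age", 40.0, 18.0, 80.0),
        "income": zscore_expr("income", 52000.0, 18000.0),
    },
)
print(query)
```

Generating the query from stored transformation parameters, rather than hand-editing SQL, keeps the scoring-time logic in step with whatever was used at build time.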

Model Deployment

Model deployment requires moving the model and the necessary transformations to the production system. For many data mining platforms, this can be a labor-intensive task. This is not the case for Oracle Data Mining, because its models are database objects and the data transformations are implemented in SQL. If the model was developed in the production instance of the database then, unlike with external data mining tools, it is already part of the production system. Otherwise (the more common case), the model is deployed using the import/export or Data Pump utilities. The process can be performed manually or automated, and it can also be scheduled to run periodically. The actual model scoring is implemented using SQL queries or Java calls, depending on the application.

IT policy and internal audit requirements may require testing of models prior to deployment to the production environment. This type of testing is independent of the testing for model predictive power performed during model development.
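The sketch below illustrates that kind of gate in Python, assuming the operational tests only check that a candidate model runs on a fixed set of test cases and returns valid probabilities. The `acceptance_test` and `deploy` functions are hypothetical, and the `production` dictionary merely stands in for the actual transfer via import/export or Data Pump.

```python
from typing import Callable, Dict, List

Case = Dict[str, float]
Model = Callable[[Case], float]  # returns the probability of the positive class


def acceptance_test(model: Model, test_cases: List[Case]) -> bool:
    """Operational checks only: the model must run on every test case and
    return a valid probability. Predictive power is assessed separately."""
    try:
        return all(0.0 <= model(case) <= 1.0 for case in test_cases)
    except Exception:  # any failure to score counts as a failed test
        return False


def deploy(name: str, model: Model, production: Dict[str, Model],
           test_cases: List[Case]) -> bool:
    """Promote a model to production only if it passes acceptance testing."""
    if not acceptance_test(model, test_cases):
        return False
    production[name] = model  # stands in for export/import or Data Pump
    return True


production: Dict[str, Model] = {}
cases = [{"income": 5000.0}, {"income": 120000.0}]

print(deploy("cc_v2", lambda c: 0.5, production, cases))                 # True
print(deploy("cc_v3", lambda c: c["income"] / 1000, production, cases))  # False: not a probability
print(deploy("cc_v4", lambda c: c["age"], production, cases))            # False: KeyError
print(sorted(production))                                                # ['cc_v2']
```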

Model Management

Usually, model management implies periodically creating models and replacing current ones in an automatic or semi-automatic fashion. In this sense, model management is tightly coupled with the model development and deployment steps. It can be seen as an outer loop controlling these steps. When dealing with large numbers of models, a model management component, responsible for automating the creation and deployment of models, becomes a necessity. A useful approach for implementing model management is the champion/challenger testing strategy. In a nutshell, champion/challenger testing is a systematic, empirical method of comparing the performance of a production model (the champion) against that of new models built on more recent data (the challengers). If a challenger model outperforms the champion model, it becomes the new champion and is deployed in the production system. Challenger models are built periodically as new data become available.
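One round of champion/challenger testing can be sketched in a few lines of Python. This is a minimal illustration, assuming accuracy on a recent holdout set as the comparison metric (the post does not prescribe one; lift or cost-based measures are equally plausible) and an optional `margin` a challenger must exceed before replacing the champion. The `Candidate` class, `champion_challenger` function, and the toy models are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Case = Dict[str, float]


@dataclass
class Candidate:
    name: str
    predict: Callable[[Case], int]  # returns a class label


def accuracy(model: Candidate, holdout: List[Tuple[Case, int]]) -> float:
    """Fraction of recent holdout cases the model classifies correctly."""
    return sum(model.predict(x) == y for x, y in holdout) / len(holdout)


def champion_challenger(champion: Candidate, challengers: List[Candidate],
                        holdout: List[Tuple[Case, int]],
                        margin: float = 0.0) -> Candidate:
    """Return the model that should be in production after this round.

    The best challenger replaces the champion only if it beats the
    champion's score on the same recent data by more than `margin`.
    """
    champ_score = accuracy(champion, holdout)
    scored = [(accuracy(c, holdout), c) for c in challengers]
    if scored:
        best_score, best = max(scored, key=lambda pair: pair[0])
        if best_score > champ_score + margin:
            return best
    return champion


holdout = [({"x": 1.0}, 1), ({"x": 2.0}, 1), ({"x": -1.0}, 0), ({"x": -3.0}, 0)]
champion = Candidate("champion_v1", lambda c: int(c["x"] > 1.5))   # accuracy 0.75
challengers = [
    Candidate("challenger_a", lambda c: int(c["x"] > 0)),           # accuracy 1.0
    Candidate("challenger_b", lambda c: 1),                         # accuracy 0.5
]

print(champion_challenger(champion, challengers, holdout).name)              # challenger_a
print(champion_challenger(champion, challengers, holdout, margin=0.3).name)  # champion_v1
```

Evaluating champion and challengers on the same recent data is what makes the comparison fair; the margin guards against swapping models for differences that are within noise.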

Another dimension of model management is the creation and management of metadata about models. Model metadata can be used to dynamically select, for scoring, the models appropriate to answer a given question. This is a key element in scaling real-time scoring systems to large numbers of models.

Suppose we have built a set of models to predict house value, one for each major housing market. This is a sensible strategy, as a national model would be too generic. This set of models could then be used in an application that predicts the value of a property based on its attributes. At scoring time, the application would select the model for the correct housing market. To accomplish this, we need metadata that associates different models with a task (e.g., predict house value) as well as other attributes that can be used to segment the models (e.g., region, product type, etc.).
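A minimal Python sketch of such metadata-driven selection follows, assuming each model is registered with a task name and a set of segmenting attributes. In a real system the metadata would more likely live in a database table; the `ModelMetadata` and `ModelRegistry` classes, the task names, and the model names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ModelMetadata:
    model_name: str
    task: str                                              # e.g. "predict_house_value"
    attributes: Dict[str, str] = field(default_factory=dict)  # segmenting attributes


class ModelRegistry:
    """In-memory stand-in for a model metadata table."""

    def __init__(self) -> None:
        self._models: List[ModelMetadata] = []

    def register(self, meta: ModelMetadata) -> None:
        self._models.append(meta)

    def select(self, task: str, **criteria: str) -> List[ModelMetadata]:
        """Return models for a task whose metadata matches every criterion."""
        return [
            m for m in self._models
            if m.task == task
            and all(m.attributes.get(k) == v for k, v in criteria.items())
        ]


registry = ModelRegistry()
registry.register(ModelMetadata("HV_NORTHEAST_SF", "predict_house_value",
                                {"region": "northeast", "product_type": "single_family"}))
registry.register(ModelMetadata("HV_NORTHEAST_CONDO", "predict_house_value",
                                {"region": "northeast", "product_type": "condo"}))
registry.register(ModelMetadata("HV_WEST_SF", "predict_house_value",
                                {"region": "west", "product_type": "single_family"}))
registry.register(ModelMetadata("CHURN_WEST", "predict_churn", {"region": "west"}))

print([m.model_name for m in registry.select("predict_house_value", region="northeast")])
# ['HV_NORTHEAST_SF', 'HV_NORTHEAST_CONDO']
print([m.model_name for m in registry.select("predict_house_value",
                                              region="west", product_type="single_family")])
# ['HV_WEST_SF']
```

Filtering on metadata first means only the relevant subset of models is scored for each case, instead of every model in the system.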

The next posts in this series will describe how to implement a large-scale real-time scoring engine with model management and give performance numbers for the system. The full series is Part 1, Part 2, and Part 3.

Readings: Business intelligence, Data mining, Oracle analytics

Labels: Model Management, Real-Time

Marcos,

The DM model life cycle diagram needs to address the premises on which it is based, whether for short-term implementation or for the long term, as right now it does close the cycle for feedback.

Thanks

Shyam

Posted by Shyam |

2/21/2006 03:11:00 PM


Shyam,

Good point. The diagram closes the loop from a short- to medium-term point of view. That is, it assumes that the business understanding and the data management are stable, which is usually the case for long periods of a system's lifetime. However, periodically, on a longer timeframe, the goals and overall architecture of the system can be revised. In this case, the closed loop would extend back to the Business Understanding step. This type of feedback is captured in the CRISP-DM flow diagram. These two types of feedback operate at different time scales. As my focus was more on the operational feedback provided by model management, I did not represent the longer loop in the diagram.

Posted by Marcos |

2/21/2006 04:48:00 PM

